The rise of generative AI, popularized by OpenAI’s ChatGPT, is reshaping the data center landscape. This rapid shift is expected to drive roughly $500 billion in data center capex by 2027. At the same time, the growing demands of large language models are prompting a significant change in data center architecture, one that resembles the infrastructure long used in high-performance computing.

AI’s Rising Power Demands: Immersion Cooling Redefines Operations

Intensifying AI workloads call for more computing power and higher speeds, which raises rack power densities and changes the requirements for Data Center Physical Infrastructure (DCPI). While facility power remains largely constant, the major shifts occur in the data center’s white space, the floor area that houses the IT racks.

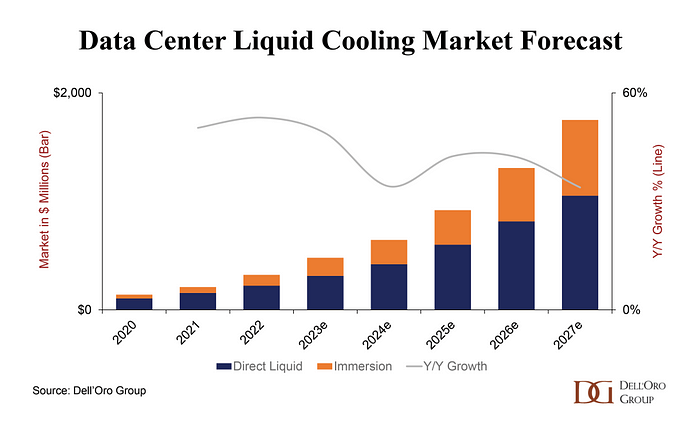

Because AI hardware consumes significant power, higher-rated rack PDUs take on a pivotal role. Intelligent rack PDUs cost more, but they enable remote power management and are becoming indispensable. At the same time, liquid cooling, and immersion cooling in particular, grows in importance. As next-generation CPUs and GPUs take on AI workloads, managing the heat they produce becomes imperative. Adoption of liquid cooling, including immersion cooling, has already been growing and is poised to accelerate alongside AI infrastructure deployment.

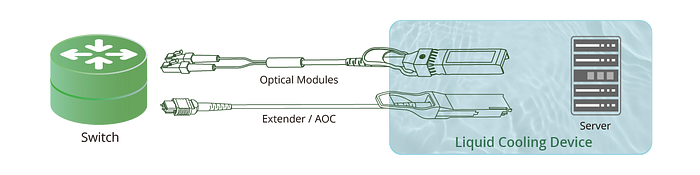

Immersion cooling involves submerging IT equipment in dielectric fluid, which dissipates heat efficiently while improving performance and energy efficiency. The technology avoids hardware inefficiencies that air cooling can introduce. Despite this potential, the immediate influence of generative AI on liquid cooling may be limited. It remains feasible to deploy current-generation IT infrastructure with air cooling, albeit at the cost of hardware efficiency and utilization.

Some end-users are retrofitting existing facilities with closed-loop air-assisted liquid cooling systems, such as rear door heat exchangers (RDHx) or direct liquid cooling. This approach allows data center operators to leverage liquid cooling’s benefits without extensive facility redesigns. However, to realize optimal efficiency at scale for AI hardware, purpose-built liquid-cooled facilities are essential.

The current interest in liquid cooling may not fully translate into deployments until 2025, but forecasts put liquid cooling revenue near $2 billion by 2027.

The Era of Power Challenges: Reshaping Data Center Growth

AI’s expanding footprint drives data center expansion, with the DCPI market projected to grow at a 10% CAGR through 2027. Yet power availability remains a concern. Supply chain disruptions extended equipment lead times during the pandemic, and those delays are now easing. However, AI-driven energy demand is growing faster than the available power supply.
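To make the 10% CAGR figure concrete, here is a minimal sketch of how compound annual growth accumulates. The base value (an index of 100) and the five-year horizon are assumptions chosen for illustration; the source states neither the base year nor the market size.

```python
def project_cagr(base_value: float, rate: float, years: int) -> list[float]:
    """Return the projected value for each year, starting with the base year."""
    return [base_value * (1 + rate) ** n for n in range(years + 1)]


# Hypothetical inputs: index of 100 in the base year, 10% CAGR, 5-year horizon.
# Neither the base value nor the horizon comes from the source.
projection = project_cagr(100.0, 0.10, 5)

for year, value in enumerate(projection):
    print(f"year {year}: {value:.1f}")
```

Under these assumptions, a 10% CAGR compounds to about 61% cumulative growth over five years, since 1.10 raised to the fifth power is roughly 1.61.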

This imbalance compels data center providers to explore inventive solutions like the ‘Bring Your Own Power’ model, aiming to sustain growth amidst the AI workload surge. The transformative impact of AI on DCPI redefines its role in enabling growth, defining performance metrics, managing costs, and advancing sustainability initiatives.

Neglecting to align DCPI requirements with AI strategies could leave advanced hardware without a suitable power source, undermining overall efficiency and potential gains.

Source: Dell’Oro Group, “AI is Ushering in a New Era for Data Center Physical Infrastructure”