The NVIDIA DGX H100 is equipped with eight single-port ConnectX-7 network cards supporting NDR 400 Gb/s and two dual-port BlueField-3 DPUs (200 Gb/s) supporting both InfiniBand and Ethernet. Its appearance is shown in the following figure.

The DGX H100 provides four QSFP56 ports for the storage and in-band management networks. It also has one 10G Ethernet port for remote host OS management and one 1G Ethernet port for remote system management.
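As a quick sanity check on the port counts above, the following Python sketch tallies the aggregate line rate of each port group on one server. The group names and the `PORTS` table are hypothetical. The sketch also assumes that the 200 Gb/s BlueField-3 figure is a per-port rate, which the text does not state explicitly.

```python
# Per-server port inventory for one DGX H100, using the figures quoted in the text.
# Assumption: the 200 Gb/s BlueField-3 figure is treated as a per-port rate.
PORTS: dict[str, tuple[int, int]] = {
    # group: (number of ports, Gb/s per port)
    "compute": (8, 400),          # 8 x single-port ConnectX-7, NDR
    "storage_inband": (4, 200),   # 2 x dual-port BlueField-3 DPU
    "host_os_mgmt": (1, 10),      # 10G Ethernet, remote host OS management
    "system_mgmt": (1, 1),        # 1G Ethernet, remote system management
}


def aggregate_gbps(ports: dict[str, tuple[int, int]]) -> dict[str, int]:
    """Return the total line rate in Gb/s for each port group."""
    return {group: count * rate for group, (count, rate) in ports.items()}


if __name__ == "__main__":
    for group, total in aggregate_gbps(PORTS).items():
        print(f"{group:16s} {total:5d} Gb/s")
```

With these inputs the compute group totals 3200 Gb/s (3.2 Tb/s) per server. Under the per-port assumption, the storage/in-band group totals 800 Gb/s.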

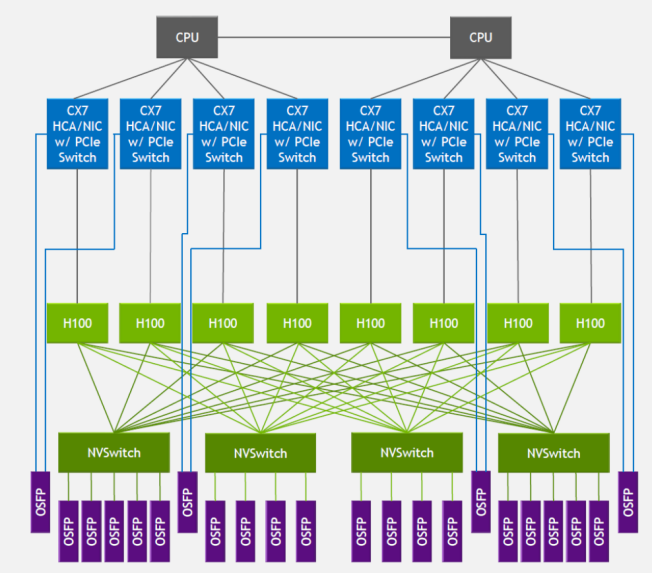

The internal network topology of the server, shown in the following figure, includes four OSFP ports for the compute network (the four in purple). The blue squares are the network cards. Each one serves both as a NIC and as a PCIe switch, bridging the CPU and the GPUs.

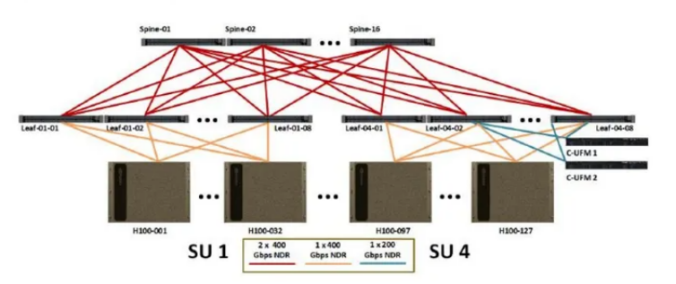

If NVIDIA SuperPOD's NVLink cluster interconnect is adopted, 32 DGX H100 servers (256 H100 GPUs) are interconnected through external NVLink switches. Inside each server, the eight GPUs connect to four NVSwitch modules. Each NVSwitch module maps to four or five OSFP optical modules, for 18 OSFPs in total, and these 18 OSFPs connect to 18 external NVLink switches. (At present, H100 servers on the market generally do not ship with these 18 OSFP modules.) This article does not cover NVLink networking and focuses instead on InfiniBand (IB) networking.

According to NVIDIA's reference design, a DGX H100 cluster is built from scalable units (SUs) of 32 DGX H100 systems each. Every four DGX H100 systems occupy one rack (an estimated ~40 kW per rack), and the various switches are housed in two additional racks. Each SU therefore spans 10 racks: 8 for servers and 2 for switches. The compute network needs only a two-tier spine-leaf fabric built from Mellanox QM9700 switches, and the network topology is shown in the figure below.

Switch usage: Every 32 DGX H100 systems form one SU with eight leaf switches. A cluster of 128 DGX H100 servers contains four SUs, for a total of 32 leaf switches. Each DGX H100 in an SU must connect to all eight leaf switches in that SU. However, each server has only four 800G OSFP ports for the compute network. To get around this, each port is fitted with an 800G optical module and broken out into two 400G QSFP ports, which gives eight links per server, one to each leaf switch. Each leaf switch has 16 uplink ports, each connected to one of the 16 spine switches.
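The SU and switch sizing described in the two paragraphs above can be checked with a small Python sketch. All constant and function names are hypothetical. The 64 × 400G port capacity per QM9700 is an assumption taken from the switch's published spec, not from this article. The port budget is checked in 400G-equivalent units rather than as a physical cage layout.

```python
import math

# Sizing sketch for one 128-server cluster, using the figures given in the text.
SERVERS_PER_SU = 32
NUM_SUS = 4
SERVERS_PER_RACK = 4
SWITCH_RACKS_PER_SU = 2
LEAVES_PER_SU = 8
NUM_SPINES = 16
OSFP_PER_SERVER = 4       # 800G compute ports per DGX H100
LINKS_PER_OSFP = 2        # each 800G port broken out into 2 x 400G
SWITCH_400G_PORTS = 64    # assumption: a QM9700 offers 64 x 400G (32 x 800G OSFP)


def racks_per_su() -> int:
    # 8 server racks (4 servers each) plus 2 switch racks
    return math.ceil(SERVERS_PER_SU / SERVERS_PER_RACK) + SWITCH_RACKS_PER_SU


def fabric_size() -> dict[str, int]:
    links_per_server = OSFP_PER_SERVER * LINKS_PER_OSFP
    # Each server must reach every leaf in its SU with exactly one 400G link.
    assert links_per_server == LEAVES_PER_SU
    leaves = LEAVES_PER_SU * NUM_SUS
    leaf_down = SERVERS_PER_SU   # 400G links per leaf, one per server in the SU
    leaf_up = NUM_SPINES * 2     # 16 x 800G uplinks = 32 x 400G equivalents
    spine_down = leaves * 2      # one 800G port per leaf, in 400G equivalents
    # Port budget check in 400G-equivalent units (not a physical cage layout).
    assert leaf_down + leaf_up <= SWITCH_400G_PORTS
    assert spine_down <= SWITCH_400G_PORTS
    return {
        "servers": SERVERS_PER_SU * NUM_SUS,
        "racks": racks_per_su() * NUM_SUS,
        "leaves": leaves,
        "spines": NUM_SPINES,
        "leaf_down_400g": leaf_down,
        "leaf_up_400g": leaf_up,
        "spine_down_400g": spine_down,
    }


if __name__ == "__main__":
    for key, value in fabric_size().items():
        print(f"{key:16s} {value}")
```

With these inputs each leaf has 32 × 400G downlinks and 16 × 800G uplinks (32 × 400G equivalents), so downstream and upstream bandwidth match 1:1 at the leaf layer. The cluster occupies 40 racks in total.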

Optical module usage: The downstream ports of the leaf switches use 400G optical modules. This requires 32 × 8 × 4 = 1024 modules (32 servers per SU × 8 links per server × 4 SUs). The uplink ports of the leaf switches use 800G optical modules, which requires 16 × 8 × 4 = 512 modules (16 uplinks × 8 leaves per SU × 4 SUs). The downstream ports of the spine switches also use 800G modules, one at the far end of each leaf uplink, for another 512. The server side adds 128 × 4 = 512 more 800G modules, one per compute OSFP port. In total, the compute network of a 128-server DGX H100 cluster uses 1536 800G optical modules and 1024 400G optical modules.
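The module count above can be reproduced with the following Python sketch. The function and parameter names are hypothetical. It follows the article's counting: one 400G module per leaf downlink, and 800G modules on the server ports, leaf uplinks and spine downlinks.

```python
def optical_modules(servers_per_su: int = 32, num_sus: int = 4,
                    leaves_per_su: int = 8, num_spines: int = 16,
                    osfp_per_server: int = 4) -> dict[str, int]:
    """Count compute-network optical modules for the leaf/spine design in the text."""
    servers = servers_per_su * num_sus
    leaves = leaves_per_su * num_sus
    # Leaf downlinks: one 400G module per server-to-leaf link (32 x 8 x 4).
    leaf_down_400g = servers_per_su * leaves_per_su * num_sus
    # Server side: one 800G module per compute OSFP port (128 x 4).
    server_800g = servers * osfp_per_server
    # Leaf uplinks: one 800G module per leaf-to-spine link (16 x 8 x 4).
    leaf_up_800g = num_spines * leaves_per_su * num_sus
    # Spine downlinks: the far end of each leaf uplink (16 x 32).
    spine_down_800g = num_spines * leaves
    return {
        "400G": leaf_down_400g,
        "800G": server_800g + leaf_up_800g + spine_down_800g,
    }


if __name__ == "__main__":
    print(optical_modules())
```

Leaf uplinks and spine downlinks are the two ends of the same links, so those two terms are always equal. This is why the 800G total is three equal shares of 512 for the default parameters.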