As AI technology and its applications continue to develop, large models, big data, and AI computing power have become increasingly important. Large models and datasets form the software foundation for AI research, and AI computing power is the critical infrastructure. In this article, we explore how AI developments affect data center network architecture.

Fat-Tree Data Center Architecture

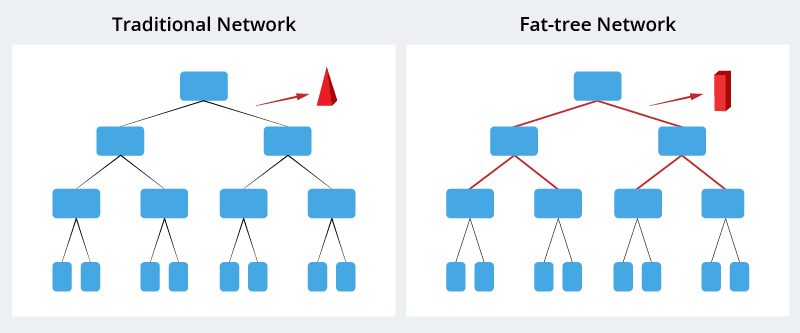

As AI large-model training spreads across industries, traditional networks can no longer meet the bandwidth and latency requirements of large-model cluster training. Distributed training of large models requires communication between GPUs, and its traffic patterns differ from those of traditional cloud computing, which increases east-west traffic in AI/ML data centers. Short, high-volume bursts of AI data increase network latency and reduce training performance in traditional network architectures. Fat-Tree networks emerged to meet this need for handling high-volume data in short bursts.
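To make the scale of GPU-to-GPU traffic concrete, here is a minimal sketch that estimates how much data one GPU sends during a ring all-reduce, a collective commonly used to synchronize gradients. The article does not name a specific collective, so the ring all-reduce, the 10 GB gradient size, and the 8-GPU cluster are all assumptions chosen for illustration. The timing ignores latency, protocol overhead, and congestion.

```python
def ring_allreduce_bytes_per_gpu(payload_bytes: float, num_gpus: int) -> float:
    """Bytes each GPU sends during one ring all-reduce of `payload_bytes`."""
    if num_gpus < 2:
        return 0.0  # a single GPU has nothing to exchange
    # The reduce-scatter and all-gather phases each move (N-1)/N of the payload.
    return 2 * (num_gpus - 1) / num_gpus * payload_bytes


def transfer_seconds(bytes_sent: float, link_gbps: float) -> float:
    """Ideal serialization time on one link (no latency or protocol overhead)."""
    return bytes_sent * 8 / (link_gbps * 1e9)


if __name__ == "__main__":
    gradient_bytes = 10e9  # hypothetical: 10 GB of gradients per training step
    gpus = 8               # hypothetical cluster size
    sent = ring_allreduce_bytes_per_gpu(gradient_bytes, gpus)
    for gbps in (100, 400, 800):
        seconds = transfer_seconds(sent, gbps)
        print(f"{gbps}G link: {seconds:.3f} s per all-reduce")
```

Under these assumptions each GPU sends almost twice the gradient size on every step, so the link rate directly bounds how quickly a training step can complete.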

In a traditional tree topology, bandwidth converges layer by layer, so the bandwidth available near the root is much smaller than the combined bandwidth of all leaf nodes. In contrast, a Fat-Tree resembles a real tree, with thicker branches near the root: link bandwidth increases from leaf to root, so the upper layers can carry the combined traffic of the layers below them. This removes the bottleneck, improves network efficiency, and speeds up training. It is the basic premise that lets the Fat-Tree architecture provide a non-blocking network.
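The sizing behind this idea can be sketched in a few lines. The example below assumes the common three-tier k-ary fat-tree construction built from identical k-port switches, which the article does not specify; the function and class names are hypothetical. It also computes an oversubscription ratio, where 1.0 means a switch has as much uplink as downlink bandwidth.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class FatTreeSize:
    pods: int
    edge_switches: int
    aggregation_switches: int
    core_switches: int
    hosts: int


def fat_tree_size(k: int) -> FatTreeSize:
    """Sizes of a three-tier k-ary fat-tree built from identical k-port switches."""
    if k < 2 or k % 2:
        raise ValueError("k must be an even integer >= 2")
    half = k // 2
    return FatTreeSize(
        pods=k,
        edge_switches=k * half,         # k/2 edge switches in each of k pods
        aggregation_switches=k * half,  # k/2 aggregation switches in each pod
        core_switches=half * half,      # (k/2)^2 core switches
        hosts=k * half * half,          # k/2 hosts per edge switch, k^3/4 total
    )


def oversubscription(downlink_gbps: float, uplink_gbps: float) -> float:
    """Host-facing bandwidth divided by fabric-facing bandwidth on one switch."""
    return downlink_gbps / uplink_gbps


if __name__ == "__main__":
    print(fat_tree_size(4))
    # Hypothetical traditional access switch: 48 x 10G down, 4 x 40G up.
    print(oversubscription(48 * 10, 4 * 40))
    # Fat-tree edge switch with k = 48 and 400G ports: 24 down, 24 up.
    print(oversubscription(24 * 400, 24 * 400))
```

With k = 4 this gives 16 hosts and 4 core switches; with 48-port switches it gives 27,648 hosts. The hypothetical traditional access switch is oversubscribed 3:1, while the fat-tree edge switch has a ratio of 1.0.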

Data Center Network Evolution

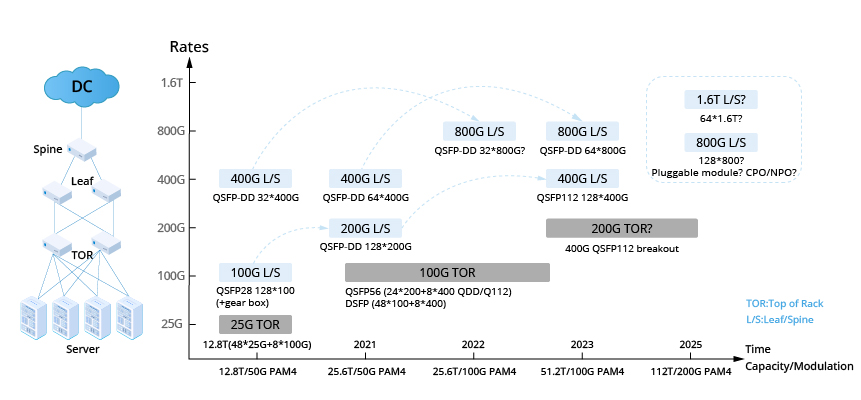

As data center applications grow more complex, the demand for network speed grows with them. From 1G, 10G, and 25G in the past to the 100G in widespread use today, data center networks are being upgraded at an accelerating pace. For large-scale AI workloads, however, 400G and 800G transfer rates are the next key step in the evolution of data center networks.

AI data centers drive the development of 400G/800G optical transceivers

Large-scale data processing requirements

Training and inference for AI algorithms require large datasets, so data centers must be able to move large amounts of data efficiently. 800G optical modules, which provide greater bandwidth, help solve this problem. An upgraded data center network typically consists of two tiers, extending from switches to servers, with 400G as the lower tier. Upgrading to 800G therefore also drives demand for 400G.

Real-time demand

In some AI application scenarios, real-time data processing is critical. For example, in autonomous driving systems, the massive amounts of data generated by sensors must be transmitted and processed quickly, and low system latency is key to a timely response. High-speed optical modules help meet these real-time requirements by reducing data transmission latency, which improves the responsiveness of the system.

Multitasking concurrency

Modern AI data centers often need to handle multiple tasks simultaneously, such as image recognition and natural language processing. High-speed 800G/400G optical modules provide the bandwidth to support these concurrent workloads.

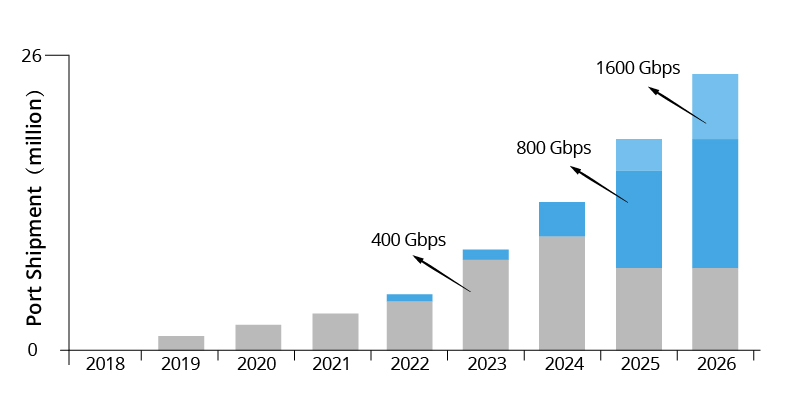

The 400G/800G optical module market has broad prospects

Demand for 400G and 800G optical modules has not yet grown significantly, but it is expected to rise sharply in 2024, driven by growing demand for AI computing. According to Dell’Oro, demand for 400G optical modules will increase in 2024. The need for high-rate data transmission driven by AI, big data, and cloud computing is also expected to accelerate growth of the 800G optical module market. This trend points to a bright future for the 800G/400G optical module market, which should expand steadily as the needs of advanced computing applications change.

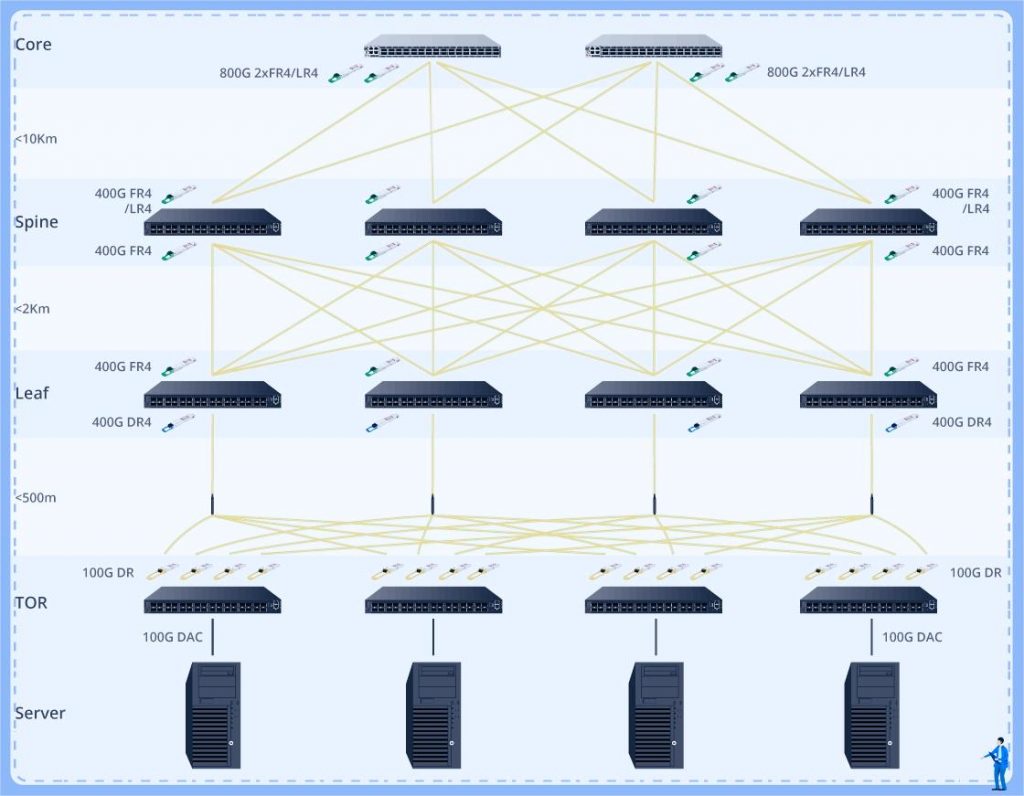

400G/800G optical module solution for typical data centers

The diagram above shows a solution for upgrading a data center to 800G. The QDD-FR4-400G optical module forms a high-bandwidth link between the MSN4410-WS2FC switch in the spine (backbone) layer and the high-performance 800G switch in the core layer, running at a 400G interface rate.

Because these optical modules use the high-density QSFP-DD form factor, they can be deployed in high-density configurations, which increases the transmission capacity of each switch. In addition, by employing PAM4 modulation and retiming technologies, these optical modules achieve faster data transfer rates while keeping latency low and improving overall system performance.
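The rate gain from PAM4 comes down to simple arithmetic: PAM4 uses four amplitude levels, so each symbol carries two bits instead of the one bit carried by NRZ. The sketch below works through that calculation. The lane counts and baud rates are nominal figures commonly quoted for 400G and 800G Ethernet, not values taken from this article, so treat them as assumptions. The results are raw line rates that include encoding and FEC overhead, which is why they exceed 400G and 800G.

```python
def bits_per_symbol(levels: int) -> int:
    """Bits carried by one symbol with `levels` amplitude levels (NRZ=2, PAM4=4)."""
    if levels < 2 or levels & (levels - 1):
        raise ValueError("levels must be a power of two >= 2")
    return levels.bit_length() - 1


def lane_rate_gbps(baud_gbd: float, levels: int) -> float:
    """Raw bit rate of a single lane in Gb/s."""
    return baud_gbd * bits_per_symbol(levels)


def module_rate_gbps(lanes: int, baud_gbd: float, levels: int) -> float:
    """Raw aggregate bit rate of all lanes in Gb/s."""
    return lanes * lane_rate_gbps(baud_gbd, levels)


if __name__ == "__main__":
    # Assumed nominal values: (description, lanes, baud rate in GBd, levels).
    configs = [
        ("400G electrical, 8 lanes PAM4", 8, 26.5625, 4),
        ("400G FR4 optical, 4 wavelengths PAM4", 4, 53.125, 4),
        ("800G, 8 lanes PAM4", 8, 53.125, 4),
        ("same 8 lanes at 26.5625 GBd with NRZ", 8, 26.5625, 2),
    ]
    for name, lanes, baud, levels in configs:
        print(f"{name}: {module_rate_gbps(lanes, baud, levels)} Gb/s raw")
```

The last configuration shows the point of PAM4: at the same symbol rate and lane count, NRZ delivers only half the raw bit rate.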

A new era of 800G/400G optical modules

With growing demand for faster, more efficient data transmission, the era of 800G/400G optical modules has arrived. Favored for their high bandwidth, advances in LPO (linear-drive pluggable optics) technology, and economic benefits, these optical modules are expected to transform the AI field and redefine the data center. With high-speed optical modules, developing and training AI at full scale is no longer just an idea.